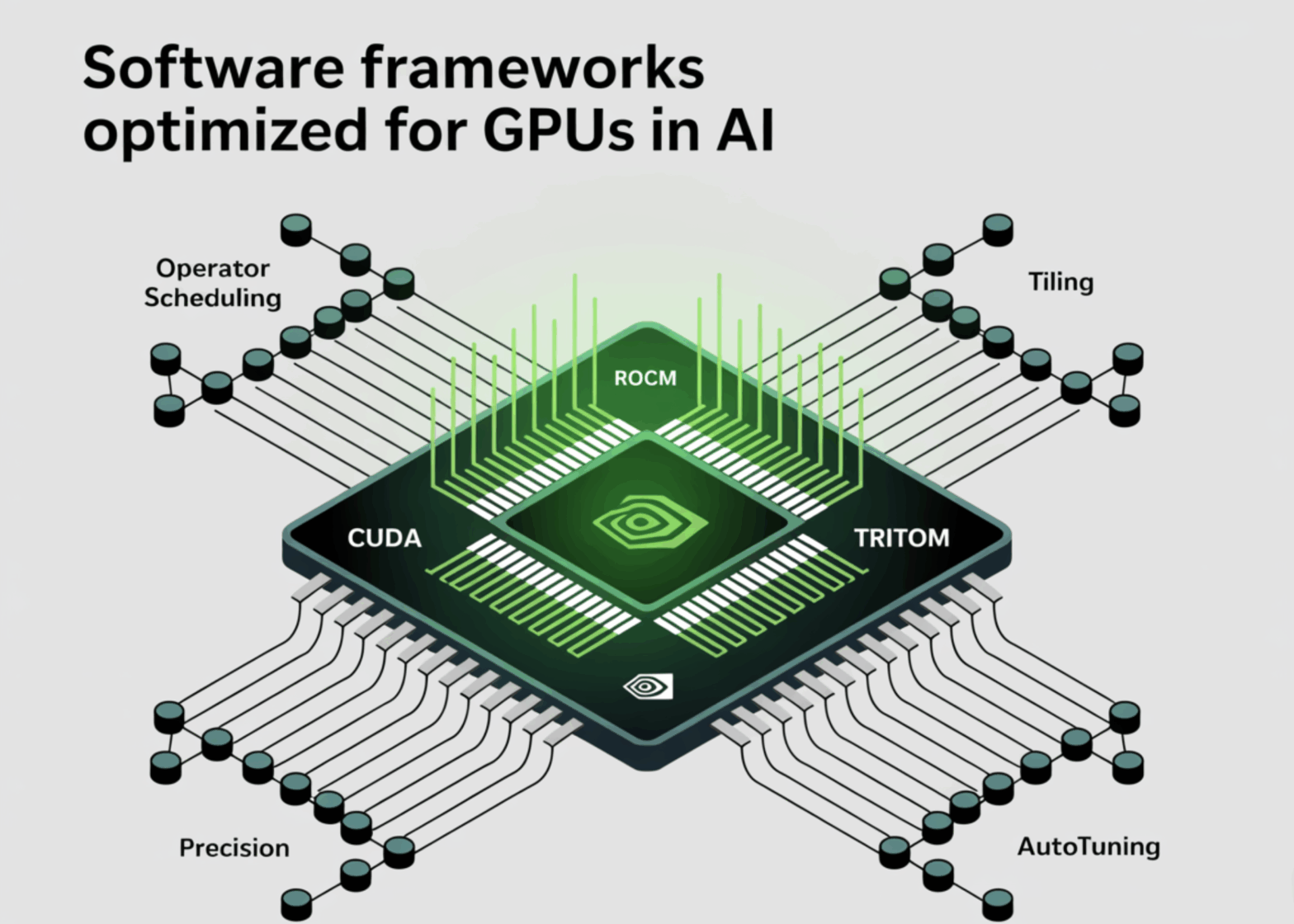

Deep-learning throughput hinges on how effectively a compiler stack maps tensor programs to GPU execution. That mapping covers thread/block schedules, memory movement, and instruction selection (e.g., Tensor Core MMA pipelines). This article examines four dominant stacks (CUDA, ROCm, Triton, and TensorRT) from the compiler's perspective and explains which optimizations move the needle in practice.

What actually determines performance on modern GPUs

Across vendors, the same levers recur:

- Operator scheduling and fusion: reduce kernel launches and round trips to HBM, and expose longer producer → consumer chains for register/shared-memory reuse. The “runtime fusion engines” in TensorRT and cuDNN illustrate this for attention and convolution blocks.

- Tiling and data layout: match tile shapes to Tensor Core/WGMMA/WMMA native fragment sizes, and avoid shared-memory bank conflicts and partition camping. CUTLASS documents warp-level GEMM tiling for both Tensor Cores and CUDA cores.

- Precision and quantization: use FP16/BF16/FP8 for training and inference, and INT8/INT4 (calibrated or QAT) for inference. TensorRT automates calibration and kernel selection under these precisions.

- Graph capture and runtime specialization: graph execution amortizes launch overheads, and common subgraphs (e.g., attention) are fused dynamically. cuDNN 9 added graph support for its attention fusion engines.

- Autotuning: search tile sizes, unroll factors, and pipelining depths per architecture/SKU. Triton and CUTLASS expose explicit autotuning hooks, and TensorRT performs builder-time tactic selection.
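The fusion and precision levers can be made concrete with a back-of-the-envelope traffic model for a memory-bound chain of elementwise ops. This is a sketch under stated assumptions, not a measurement. Each unfused kernel is assumed to read its whole input from HBM and write its whole output back. A fused kernel is assumed to read once and write once. L2 caching is ignored. The tensor size and op count are hypothetical.

```python
def hbm_bytes(num_elements: int, num_ops: int, bytes_per_elem: int, fused: bool) -> int:
    """Approximate HBM traffic for a chain of unary elementwise ops.

    Assumes each kernel reads one input tensor and writes one output tensor of
    the same shape; when fused, intermediates stay in registers, so only the
    first read and the last write touch HBM.
    """
    kernels = 1 if fused else num_ops
    return kernels * 2 * num_elements * bytes_per_elem  # one read + one write per kernel


N = 4096 * 4096  # hypothetical activation size (elements)
OPS = 4          # hypothetical chain of four unary ops, e.g. scale -> GELU -> clamp -> scale

unfused_fp16 = hbm_bytes(N, OPS, bytes_per_elem=2, fused=False)
fused_fp16 = hbm_bytes(N, OPS, bytes_per_elem=2, fused=True)
fused_int8 = hbm_bytes(N, OPS, bytes_per_elem=1, fused=True)

for name, nbytes in [("unfused FP16", unfused_fp16), ("fused FP16", fused_fp16), ("fused INT8", fused_int8)]:
    print(f"{name:>12}: {nbytes / 2**20:6.0f} MiB ({unfused_fp16 // nbytes}x reduction)")
```

Under these assumptions the model gives 256 MiB, 64 MiB, and 32 MiB. Fusion alone cuts traffic 4x, and INT8 halves it again. This is why fusion and quantization gains compound for memory-bound layers.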

With that lens, here is how each stack implements these levers.

CUDA: nvcc/ptxas, cuDNN, CUTLASS, and CUDA Graphs

Compiler path. nvcc compiles CUDA code to PTX, and ptxas then lowers PTX to SASS (architecture-specific machine code). Controlling optimization requires passing flags to both the host and device phases; for kernels, the key is -Xptxas. Developers often miss that -O3 alone affects only host code.
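The lowering path and the host/device flag split can be made explicit by running each stage by hand. The sketch below only assembles the command lines and does not invoke the toolchain. The file name `kernel.cu` and the target `sm_90` are placeholder assumptions. The flags used (`nvcc -ptx`, `-arch`, `-Xptxas`, `ptxas -O3 -v`, `cuobjdump -sass`) are standard CUDA toolkit options.

```python
import shlex


def cuda_build_commands(src: str = "kernel.cu", arch: str = "sm_90") -> dict[str, list[str]]:
    """Assemble (but do not run) the commands for each CUDA lowering stage."""
    stem = src.rsplit(".", 1)[0]
    return {
        # Stage 1: CUDA C++ -> PTX (virtual ISA) via nvcc.
        "to_ptx": ["nvcc", "-ptx", f"-arch={arch}", src, "-o", f"{stem}.ptx"],
        # Stage 2: PTX -> SASS in a cubin via ptxas; here -O3 and -v apply to device code.
        "to_sass": ["ptxas", f"-arch={arch}", "-O3", "-v", f"{stem}.ptx", "-o", f"{stem}.cubin"],
        # Stage 3: disassemble the cubin to inspect the generated SASS.
        "inspect": ["cuobjdump", "-sass", f"{stem}.cubin"],
        # One-shot build: the first -O3 is host-only; device flags go through -Xptxas.
        "one_shot": ["nvcc", "-O3", f"-arch={arch}", "-Xptxas", "-O3,-v", "-c", src, "-o", f"{stem}.o"],
    }


for stage, cmd in cuda_build_commands().items():
    print(f"{stage:>8}: {shlex.join(cmd)}")
```

To execute a stage on a machine with the CUDA toolkit installed, pass its list to `subprocess.run(cmd, check=True)`. The `-v` output from ptxas reports per-kernel register and shared-memory usage. That makes it a quick check that device-side flags actually took effect.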

Kernel generation and libraries.

- CUTLASS provides parametric templates for GEMM/convolution that implement warp-level tiling, Tensor Core MMA pipelines, and shared-memory (smem) iterators designed for conflict-free access. These templates are canonical references for writing peak-performance kernels, including Hopper's WGMMA path.

- cuDNN 9 introduced runtime fusion engines (notably for attention blocks), native CUDA Graph integration for those engines, and updates for new compute capabilities. Together these reduce dispatch overhead and improve memory locality in Transformer workloads.
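CUTLASS-style kernels are parameterized by a tile shape, so a GEMM whose dimensions are not multiples of that tile pays for padded work. The sketch below shows this tile-quantization effect. It assumes a hypothetical 128×128 output tile per threadblock and ignores K-dimension padding and wave quantization across SMs.

```python
import math


def tile_efficiency(m: int, n: int, tile_m: int, tile_n: int) -> float:
    """Fraction of computed output elements that are useful for an M x N GEMM
    when every threadblock computes a full tile_m x tile_n output tile."""
    padded_m = math.ceil(m / tile_m) * tile_m
    padded_n = math.ceil(n / tile_n) * tile_n
    return (m * n) / (padded_m * padded_n)


# Hypothetical 128x128 tile; shapes chosen to show aligned, ragged, and skinny cases.
for m, n in [(4096, 4096), (4096, 4100), (1000, 1000), (16, 4096)]:
    eff = tile_efficiency(m, n, tile_m=128, tile_n=128)
    print(f"M={m:5d} N={n:5d}: {eff:6.1%} of the computed tile area is useful")
```

The skinny 16 × 4096 case, typical of small-batch decoding, uses only 12.5% of each 128-row tile. This is one reason tile shape is usually chosen per problem shape rather than fixed.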

Performance effects.

- Replacing unfused PyTorch ops with cuDNN attention fusion typically cuts kernel launches and global-memory traffic. Combined with CUDA Graphs, it also reduces CPU bottlenecks in short-sequence inference.

- On Hopper/Blackwell, aligning tile shapes to WGMMA/Tensor Core native sizes is decisive. CUTLASS tutorials quantify how mis-sized tiles waste Tensor Core throughput.
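The CPU-side benefit of CUDA Graphs in short-sequence inference shows up in a simple launch-overhead model. Eager execution pays a host launch cost per kernel, while a captured graph pays roughly one graph-launch cost per replay. Per-node device-side overhead is ignored. The kernel count and microsecond costs below are hypothetical placeholders, not measured values.

```python
def step_latency_us(num_kernels: int, gpu_us_per_kernel: float, launch_us: float,
                    use_graph: bool, graph_launch_us: float = 10.0) -> float:
    """Toy latency model for one inference step.

    The GPU cannot finish before the CPU has issued the work, so latency is
    bounded below by whichever side is slower. Eager mode issues one launch
    per kernel; a captured graph is issued with a single graph launch.
    """
    gpu_time = num_kernels * gpu_us_per_kernel
    cpu_time = graph_launch_us if use_graph else num_kernels * launch_us
    return max(cpu_time, gpu_time)


# Hypothetical decode step: 200 tiny kernels of ~3 us each, ~5 us host cost per launch.
eager = step_latency_us(200, gpu_us_per_kernel=3.0, launch_us=5.0, use_graph=False)
graph = step_latency_us(200, gpu_us_per_kernel=3.0, launch_us=5.0, use_graph=True)
print(f"eager: {eager:.0f} us/step (CPU-bound), graph: {graph:.0f} us/step (GPU-bound)")
```

In PyTorch, capture and replay are exposed through `torch.cuda.graph` and `torch.cuda.CUDAGraph.replay()`. The gain only materializes when per-launch host cost is comparable to or larger than kernel runtime, as in this toy setting.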

When CUDA is the right tool. You need maximum control over instruction selection, occupancy, and smem choreography. Alternatively, you are extending kernels beyond library coverage while staying on NVIDIA GPUs.

ROCm: HIP/Clang toolchain, rocBLAS/MIOpen, and the 6.x series

Compiler path. ROCm uses Clang/LLVM to compile HIP (a CUDA-like language) into GCN/RDNA ISA. The 6.x series has focused on performance and framework coverage, and release notes track component-level optimizations and hardware/OS support.

Libraries and kernels.

- rocBLAS and MIOpen implement GEMM/convolution primitives with architecture-aware tiling and algorithm selection, similar in spirit to cuBLAS/cuDNN. The consolidated changelog highlights iterative performance work across these libraries.

- Recent ROCm work includes better Triton enablement on AMD GPUs. This allows Python-level kernel authoring while still lowering through LLVM to AMD backends.

Performance effects.

- On AMD GPUs, matching LDS (shared memory) bank widths and vectorized global loads to matrix tile shapes is as important as smem bank alignment on NVIDIA. Compiler-assisted fusion in frameworks (e.g., attention), plus library autotuning in rocBLAS/MIOpen, typically closes a large fraction of the gap to handwritten kernels, depending on architecture and driver. Release documentation shows continuous tuner improvements across 6.0–6.4.x.
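Bank alignment on either vendor comes down to the same arithmetic. With B banks of 4-byte words, word address a maps to bank a mod B, and lanes that touch different words in the same bank serialize. The sketch assumes 32 banks of 4 bytes, evaluated over a group of 32 lanes. That is the common NVIDIA shared-memory configuration. On AMD, the LDS bank count and lane grouping vary by generation, so treat 32 as an assumption there. The hypothetical 32×32 float tile read column-wise shows why padding the row stride by one word removes the conflicts.

```python
from collections import defaultdict

NUM_BANKS = 32  # assumption: 32 banks of 4-byte words


def max_conflict_degree(word_addresses: list[int], num_banks: int = NUM_BANKS) -> int:
    """Largest number of distinct words mapped to one bank (1 means conflict-free)."""
    per_bank: dict[int, set[int]] = defaultdict(set)
    for addr in word_addresses:
        # Repeated reads of the same word are a broadcast, not a conflict, hence a set.
        per_bank[addr % num_banks].add(addr)
    return max(len(words) for words in per_bank.values())


def column_read_addresses(col: int, rows: int, row_stride: int) -> list[int]:
    """Word addresses touched when lane i reads element [i][col] of a row-major tile."""
    return [i * row_stride + col for i in range(rows)]


for stride in (32, 33):
    addrs = column_read_addresses(col=0, rows=32, row_stride=stride)
    print(f"row stride {stride}: {max_conflict_degree(addrs)}-way conflict")
```

With a stride of 32 words, every lane lands in bank 0, giving a 32-way conflict. A stride of 33 spreads the lanes across all 32 banks. This is the usual "pad by one" trick for transposed smem/LDS access.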

When ROCm is the right tool. You need native support and optimization on AMD accelerators, with HIP portability for existing CUDA-style kernels and a clear LLVM toolchain.
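HIP deliberately mirrors the CUDA runtime API, so much of a port is mechanical renaming; ROCm's hipify-perl and hipify-clang tools automate this. The toy function below is not those tools. It applies only a handful of real CUDA-to-HIP renames to illustrate the idea and leaves unknown identifiers untouched. Kernel syntax is also left alone, because HIP accepts `__global__`, `threadIdx`, and `<<<...>>>` launches.

```python
import re

# A handful of real CUDA runtime -> HIP runtime renames (a tiny subset, for illustration).
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Longest names first and whole identifiers only, so cudaMemcpyHostToDevice is
# never partially rewritten by the shorter cudaMemcpy rule.
_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(k) for k in sorted(CUDA_TO_HIP, key=len, reverse=True)) + r")\b"
)


def toy_hipify(source: str) -> str:
    """Rename known CUDA runtime identifiers; kernel syntax is left untouched."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(0)], source)


cuda_src = """#include <cuda_runtime.h>
__global__ void scale(float* x, float a, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] *= a;
}
void run(float* h, int n) {
    float* d;
    cudaMalloc(&d, n * sizeof(float));
    cudaMemcpy(d, h, n * sizeof(float), cudaMemcpyHostToDevice);
    scale<<<(n + 255) / 256, 256>>>(d, 2.0f, n);
    cudaMemcpy(h, d, n * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d);
}
"""
print(toy_hipify(cuda_src))
```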

Triton: A DSL and compiler for custom kernels

Compiler path. Triton is a Python-embedded DSL that lowers through LLVM. It handles vectorization, memory coalescing, and register allocation while giving explicit control over block sizes and program IDs. The build docs show the LLVM dependency and custom builds. NVIDIA's developer materials discuss tuning Triton for newer architectures (e.g., Blackwell), with FP16/FP8 GEMM improvements.
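Triton's programming model is block-level. Each program instance gets an ID and operates on a vector of BLOCK_SIZE offsets, with a mask guarding the ragged tail. The sketch below emulates that model in plain Python so it runs without a GPU. A sequential loop stands in for programs that Triton would run in parallel. The comments name the Triton calls each step corresponds to (`tl.program_id`, `tl.arange`, `tl.load`/`tl.store` with `mask=`). This illustrates the execution model only and is not Triton code.

```python
import math


def emulate_add_kernel(x: list[float], y: list[float], block_size: int) -> list[float]:
    """Plain-Python emulation of launching a 1-D, Triton-style vector-add kernel."""
    n = len(x)
    out = [0.0] * n
    grid = math.ceil(n / block_size)  # Triton code would use triton.cdiv(n, BLOCK_SIZE)
    for pid in range(grid):  # one iteration per program: pid = tl.program_id(axis=0)
        # offsets = pid * BLOCK_SIZE + tl.arange(0, BLOCK_SIZE)
        offsets = [pid * block_size + i for i in range(block_size)]
        # mask = offsets < n  -> disables the out-of-range lanes of the last block
        mask = [off < n for off in offsets]
        for off, valid in zip(offsets, mask):
            if valid:  # tl.load(..., mask=mask) / tl.store(..., mask=mask) skip masked lanes
                out[off] = x[off] + y[off]
    return out


print(emulate_add_kernel([1.0] * 10, [2.0] * 10, block_size=4))  # 3 programs; the last has 2 masked lanes
```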

Optimizations.

- Autotuning over tile sizes, num_warps, and pipelining stages; static masking for boundary conditions without scalar fallbacks; shared-memory staging and software pipelining to overlap global loads with compute.

- Triton's design aims to automate the error-prone parts of CUDA-level optimization while leaving block-level tiling decisions to the author. The original announcement explains this separation of concerns.

Performance effects.

- Triton shines when you need a fused, shape-specialized kernel outside library coverage (e.g., bespoke attention variants, normalization-activation chains). On modern NVIDIA parts, vendor collaborations report architecture-specific improvements in the Triton backend. These reduce the penalty relative to CUTLASS-style kernels for common GEMMs.

When Triton is the right tool. You want near-CUDA performance for custom fused ops without writing SASS/WMMA, and you value Python-first iteration with autotuning.

TensorRT (and TensorRT-LLM): builder-time graph optimization for inference

Compiler path. TensorRT ingests ONNX or framework graphs and emits a hardware-specific engine. During the build it performs layer/tensor fusion, precision calibration (INT8, FP8/FP16), and kernel tactic selection; the best-practices docs describe these builder phases. TensorRT-LLM extends this with LLM-specific runtime optimizations.
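At its core, builder-time layer fusion is pattern matching over the network graph. The toy pass below is not TensorRT's implementation. It assumes a hypothetical linear list of op names in which every intermediate has a single consumer. It collapses each `conv -> bias_add -> relu` run into one fused node (conv-bias-activation fusion), so one kernel replaces three and the intermediates never round-trip through HBM.

```python
FUSION_PATTERN = ("conv", "bias_add", "relu")  # hypothetical op names


def fuse_conv_bias_relu(ops: list[str]) -> list[str]:
    """Greedily collapse every conv -> bias_add -> relu run into one fused op.

    Assumes a linear chain in which each intermediate has exactly one consumer;
    a real graph compiler must verify that before fusing.
    """
    k = len(FUSION_PATTERN)
    fused: list[str] = []
    i = 0
    while i < len(ops):
        if tuple(ops[i:i + k]) == FUSION_PATTERN:
            fused.append("conv_bias_relu")  # one kernel; intermediates stay on chip
            i += k
        else:
            fused.append(ops[i])
            i += 1
    return fused


graph = ["conv", "bias_add", "relu", "maxpool", "conv", "bias_add", "relu", "conv", "softmax"]
optimized = fuse_conv_bias_relu(graph)
print(optimized)  # ['conv_bias_relu', 'maxpool', 'conv_bias_relu', 'conv', 'softmax']
print(f"kernels: {len(graph)} -> {len(optimized)}")  # kernels: 9 -> 5
```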

Optimizations.

- Graph level: constant folding, concat-slice canonicalization, conv-bias-activation fusion, attention fusion.

- Precision: post-training calibration (entropy/percentile/MSE) and per-tensor quantization, plus SmoothQuant/QAT workflows in TensorRT-LLM.

- Runtime: paged KV cache, in-flight batching, and scheduling for multi-stream/multi-GPU deployments (per the TensorRT-LLM docs).
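Post-training calibration chooses a clipping range (amax) per tensor from sample activations and derives a symmetric INT8 scale of amax / 127. The sketch contrasts plain max calibration with percentile calibration on hypothetical data containing one large outlier. It is a simplified illustration, not TensorRT's calibrator. Entropy calibration, which minimizes KL divergence instead, is not shown.

```python
import math


def amax_max(samples: list[float]) -> float:
    """Max calibration: the clip range covers the largest magnitude seen."""
    return max(abs(v) for v in samples)


def amax_percentile(samples: list[float], pct: float) -> float:
    """Percentile calibration: the clip range is the pct-th percentile of |x| (nearest rank)."""
    mags = sorted(abs(v) for v in samples)
    rank = max(1, math.ceil(pct / 100 * len(mags)))
    return mags[rank - 1]


def quantize(x: float, amax: float) -> int:
    """Symmetric per-tensor INT8 quantization with saturation to [-127, 127]."""
    scale = amax / 127
    return max(-127, min(127, round(x / scale)))


def dequantize(q: int, amax: float) -> float:
    return q * amax / 127


# Hypothetical activations: values in [-1, 1] plus one large outlier.
acts = [i / 100 for i in range(-100, 101)] + [50.0]
for name, amax in [("max", amax_max(acts)), ("p99", amax_percentile(acts, 99.0))]:
    err = max(abs(dequantize(quantize(v, amax), amax) - v) for v in acts if abs(v) <= 1.0)
    print(f"{name}: amax={amax:.2f}, max error on |x|<=1: {err:.4f}")
```

Percentile calibration sacrifices the single outlier, which is clipped to 1.0, in exchange for a roughly 50x finer step for the bulk of the distribution. Whether that trade is acceptable depends on how much the model relies on outliers.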

Performance effects.

- The largest wins typically come from three sources: end-to-end INT8 (or FP8 on Hopper/Blackwell, where supported), removing framework overhead by running a single engine, and aggressive attention fusion. TensorRT's builder produces per-architecture engine plans to avoid generic kernels at runtime.

When TensorRT is the right tool. Production inference on NVIDIA GPUs, where you can pre-compile an optimized engine and benefit from quantization and large-graph fusion.

Practical guidance: choosing and tuning the stack

- Training vs. inference.

  - Training/experimental kernels → CUDA + CUTLASS (NVIDIA) or ROCm + rocBLAS/MIOpen (AMD); Triton for custom fused ops.

  - Production inference on NVIDIA → TensorRT/TensorRT-LLM for global graph-level gains.

- Exploit architecture-native instructions.

  - On NVIDIA Hopper/Blackwell, make sure tiles map to WGMMA/WMMA sizes. CUTLASS materials show how warp-level GEMM and smem iterators should be structured.

  - On AMD, align LDS usage and vector widths to CU datapaths. Use the ROCm 6.x autotuners and Triton-on-ROCm for shape-specialized ops.

- Fuse first, then quantize.

  - Kernel/graph fusion reduces memory traffic, while quantization reduces bandwidth and increases math density. TensorRT's builder-time fusions plus INT8/FP8 often deliver multiplicative gains.

- Use graph execution for short sequences.

  - CUDA Graphs integrated with cuDNN attention fusions amortize launch overheads in autoregressive inference.

- Treat compiler flags as first-class.

  - For CUDA, remember the device-side flags, for example -Xptxas -O3,-v (and -Xptxas -O0 when diagnosing). Host-only -O3 is not sufficient.



Michal Sutter is a data scientist with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at turning complex datasets into actionable insights.