What happens when organizations struggle with hidden inefficiencies in their storage infrastructure? A staggering amount of energy, capital and human attention is wasted: every watt of power consumed unnecessarily, every rack unit filled with underused hardware, and every hour spent tuning parameters.

But it is not just about wasted resources; it is about wasted opportunity. In the era of AI and hyper-connected cloud ecosystems, a foundation of efficiency can determine whether your data strategy succeeds or collapses under its own weight.

The inefficiency of the legacy approach

For decades we have accepted a fundamentally flawed model for enterprise storage. Arrays of SSDs, each containing its own processor, RAM, firmware and flash translation layer, required highly specialized engineers to manually tune parameters to balance performance, protection and capacity. This came with regular performance upkeep, uncoordinated maintenance tasks and disruptive upgrades every three to five years.

It is like building a modern city in which every building runs its own power plant instead of connecting to a central grid. Customers pay for these inefficiencies, not only in acquisition costs but also in rack space, power consumption, cooling requirements and operational complexity.

When we founded Pure Storage, we asked: What if we could remove these inefficiencies at the architectural level instead of compensating for them? We questioned the assumption that every storage device needs its own intelligence. We asked whether administrators should be forced to make complex RAID configuration decisions. We rejected the idea that efficiency must come at the expense of performance or resilience.

The result: a storage platform that actually becomes more efficient as it scales. Here is how.

DirectFlash: breaking free of SSD limitations

We have a saying at Pure Storage: “Storage is not a capacity problem – it is a physics problem.” That may seem counterintuitive, since most storage discussions revolve around terabytes and petabytes, but the statement is exactly right. The most profound innovations in storage are not about simply adding more capacity. They are about how we interact with the underlying physics of the media.

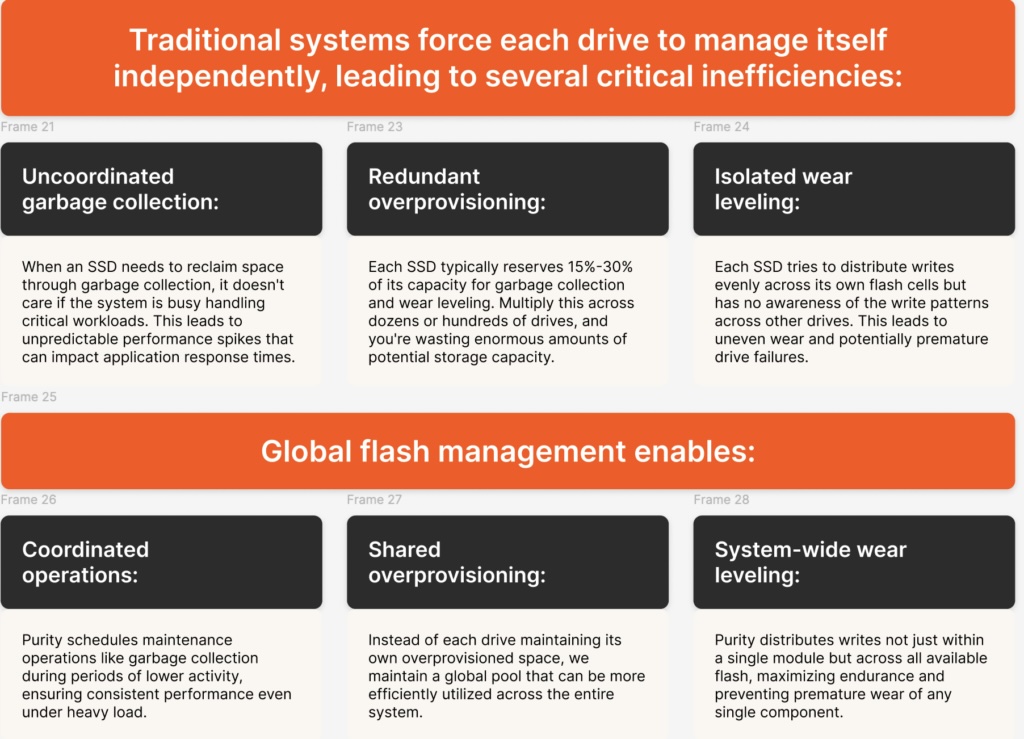

In conventional systems, each SSD contains its own flash translation layer (FTL): software that manages how data is written to and read from the physical flash cells. Each SSD makes its own decisions about data placement, garbage collection and wear leveling, with no awareness of what the system as a whole is doing.
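To make the role of an FTL concrete, here is a minimal sketch of a page-mapped translation layer. It is a toy model, not the firmware of any real SSD: the class name `ToyFTL` and its methods are hypothetical, and garbage collection and wear leveling are left out entirely. It only shows the core idea that flash is written out of place, so a logical-to-physical map must be maintained and overwritten pages become stale.

```python
class ToyFTL:
    """Toy page-mapped flash translation layer (illustrative only)."""

    def __init__(self, num_pages: int) -> None:
        self.num_pages = num_pages
        self.l2p: dict[int, int] = {}      # logical page -> physical page
        self.valid = [False] * num_pages   # which physical pages hold live data
        self.next_free = 0                 # pages are consumed append-only

    def write(self, logical_page: int) -> int:
        """Place a logical page at the next free physical page."""
        if self.next_free >= self.num_pages:
            raise RuntimeError("no free pages: garbage collection needed")
        old = self.l2p.get(logical_page)
        if old is not None:
            self.valid[old] = False        # flash cannot be overwritten in place
        phys = self.next_free
        self.next_free += 1
        self.l2p[logical_page] = phys
        self.valid[phys] = True
        return phys

    def read(self, logical_page: int) -> int:
        """Return the physical page currently holding the logical page."""
        return self.l2p[logical_page]

    def stale_pages(self) -> int:
        """Pages written but no longer live; garbage collection reclaims these."""
        return self.next_free - sum(self.valid)


ftl = ToyFTL(num_pages=8)
ftl.write(0)   # lands on physical page 0
ftl.write(1)   # lands on physical page 1
ftl.write(0)   # rewrite lands on physical page 2; page 0 is now stale
```

In a conventional array, every drive runs its own private copy of this kind of bookkeeping, and none of it is visible to the system above.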

Pure Storage® DirectFlash® technology fundamentally rethinks this design. It removes the conventional SSD controller architecture and enables direct access to raw NAND flash, with no embedded controllers or on-board DRAM. This creates efficiency through:

- Elimination of redundant resources: In a conventional 24-drive system, you may have 24 controllers, 24 sets of firmware and potentially terabytes of DRAM inside the drives themselves. DirectFlash eliminates this redundancy, lowering hardware costs and power consumption.

- Media-level visibility: Without the black box of SSDs, Purity can see when cells are programmed, erased or read. It can also see the health and endurance of every region of flash, so we can make intelligent decisions that are impossible in traditional architectures.
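The DRAM figure in the first point can be sanity-checked with simple arithmetic. The sketch below assumes a flat page-level map with one 4-byte entry per 4 KiB page, which is a common rule of thumb (roughly 1 GB of DRAM per 1 TB of flash) and not a statement about any specific drive. The 15.36 TB drive capacity is a hypothetical value chosen for illustration; only the drive count of 24 comes from the text.

```python
def ftl_map_bytes(capacity_bytes: int, page_bytes: int = 4096, entry_bytes: int = 4) -> int:
    """DRAM needed for a flat page-level logical-to-physical map."""
    return (capacity_bytes // page_bytes) * entry_bytes


DRIVES = 24                        # drive count from the example above
DRIVE_CAPACITY = 15_360 * 10**9    # hypothetical 15.36 TB drive

per_drive = ftl_map_bytes(DRIVE_CAPACITY)
total = DRIVES * per_drive
print(f"per drive: {per_drive / 10**9:.0f} GB, array: {total / 10**9:.0f} GB")
# prints "per drive: 15 GB, array: 360 GB"
```

Under these assumptions the mapping tables alone account for hundreds of gigabytes across the array, and the total grows in proportion to drive capacity.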

Global flash management: the intelligence layer

Imagine that, instead of individual traffic lights making independent decisions at every intersection, you had an intelligent system that could see the traffic patterns across an entire city and optimize the flow globally. That is the difference between conventional SSDs and Pure Storage DirectFlash technology. Instead of optimizing individual components, we optimize the entire system through Purity's global flash management, the intelligence that orchestrates how data flows across the entire flash pool.

The result? Our customers typically see two to five times better endurance from the same flash compared with conventional approaches, as well as consistently lower and more predictable latency.
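One way to see why system-wide management can help endurance is a toy comparison of per-drive and pool-wide wear leveling. This is a deliberately simplified model and not Purity's algorithm: both functions are hypothetical, they assume perfect leveling within their scope, and the skewed erase counts are invented for illustration. The numbers it produces are not related to the customer figures quoted above.

```python
def peak_wear_per_drive(erases: list[int], blocks_per_drive: int) -> float:
    """Each drive levels wear only across its own blocks."""
    return max(e / blocks_per_drive for e in erases)


def peak_wear_global(erases: list[int], blocks_per_drive: int) -> float:
    """One pool-wide layer levels wear across every block in the system."""
    return sum(erases) / (blocks_per_drive * len(erases))


# Hypothetical block-erase demand on four drives under a skewed workload.
erases = [9_000, 1_000, 1_000, 1_000]
BLOCKS = 100

local = peak_wear_per_drive(erases, BLOCKS)   # 90.0 erases per block on the hot drive
pooled = peak_wear_global(erases, BLOCKS)     # 30.0 erases per block everywhere
```

With per-drive leveling, the busiest drive reaches its endurance limit first even though the other drives are barely worn. With pool-wide leveling, the same total work is spread over every block.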

Fully optimized data layout: protection without compromise

The third pillar of our efficiency approach is how we protect data. Traditional storage forces administrators to make difficult trade-offs between performance, protection and capacity when configuring RAID. These decisions can lead either to inefficient use of resources or to weakened protection.

These trade-offs disappear with Pure Storage. Purity automatically implements the optimal data protection scheme for your specific configuration, with no manual tuning required. This includes:

- Adaptive RAID schemes: The system automatically selects the most efficient RAID scheme based on the number and type of flash modules, to deliver the best balance of performance, protection and capacity efficiency.

- Variable stripe sizes: Unlike RAID implementations with a fixed stripe size, Purity dynamically adjusts stripe sizes to optimize for different workloads and data types.

- Intelligent rebuild processes: If a component fails, Purity rebuilds only the affected data, not entire drives, which dramatically shortens recovery times and minimizes the performance impact during recovery.

You get the highest level of data protection while maximizing usable capacity, all without administrators having to become RAID experts.
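The arithmetic behind these points can be sketched in a few lines. The example below is a generic illustration of parity overhead and rebuild scope, not a description of how Purity chooses its layouts. The dual-parity default, the stripe widths, the 18 TB capacity, the 6 TB of live data and the 500 MB/s rebuild rate are all hypothetical values.

```python
def usable_fraction(stripe_width: int, parity: int = 2) -> float:
    """Fraction of raw capacity left for data in a stripe with `parity` parity shards."""
    if stripe_width <= parity:
        raise ValueError("stripe must be wider than its parity count")
    return (stripe_width - parity) / stripe_width


def rebuild_hours(bytes_to_rebuild: float, rate_bytes_per_s: float) -> float:
    """Time to reconstruct a given amount of data at a steady rate."""
    return bytes_to_rebuild / rate_bytes_per_s / 3600


# Wider stripes spend a smaller share of raw capacity on parity.
for width in (6, 10, 20):
    print(f"{width}-wide stripe: {usable_fraction(width):.0%} usable")
# prints 67%, 80% and 90% usable respectively

TB = 10**12
capacity, live_data, rate = 18 * TB, 6 * TB, 500 * 10**6   # hypothetical values
print(f"whole device: {rebuild_hours(capacity, rate):.1f} h")     # 10.0 h
print(f"live data only: {rebuild_hours(live_data, rate):.1f} h")  # 3.3 h
```

The first loop shows why matching the stripe geometry to the number of modules matters for usable capacity. The second comparison shows why rebuilding only the data that was actually affected shortens the recovery window.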

The compounding economic advantages of extreme efficiency

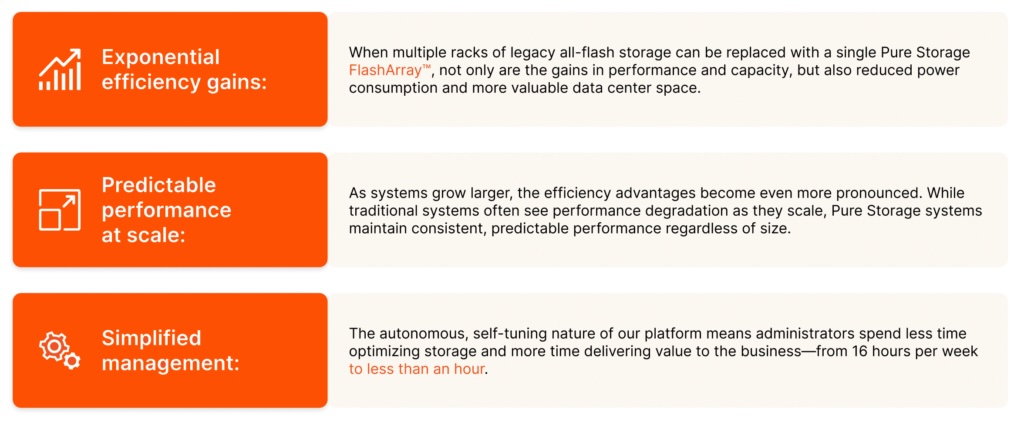

As data volumes grow, these three elements (DirectFlash, global flash management and optimized data layout) work together, and the economic advantages compound.

When inefficient storage is no longer a limiting factor, companies can do more without compromise. But this is about more than the immediate technical and economic advantages. It is about how this foundation enables a truly unified enterprise data platform.

Efficiency as the foundation of enterprise cloud data economics

The economics of enterprise cloud models depend on storage efficiency. Why? When you remove inefficiencies at the storage level, you create a foundation for a unified data experience across all workloads, deployment models and data types. The result is a platform that delivers extreme power density while drastically reducing the management overhead that constrains traditional storage.

By eliminating inefficiencies at the device level, implementing software-driven global intelligence and automating optimization, the Pure Storage platform delivers true cloud-like efficiency across all data workloads.

The original article is here.

The views and opinions expressed in this article are those of the author and do not necessarily reflect those of CDOTRENDS. Photo credits: iStockphoto/Anastasia Sudino; Diagrams: Pure Storage