Quantum dots give a machine vision sensor superhuman adaptation speed

Washington, July 1, 2025 - In dazzling light or pitch-black darkness, our eyes can adjust to extreme lighting conditions within a few minutes. The human visual system, including the eyes, neurons and brain, can also learn and memorize settings so that it adapts faster the next time we encounter similar lighting challenges.

In an article published this week in Applied Physics Letters, by AIP Publishing, researchers from Fuzhou University in China describe a machine vision sensor that uses quantum dots to adapt to extreme changes in light in about 40 seconds, much faster than the human eye, by imitating key behaviors of the eye. Their results could be a game changer for robotic vision and autonomous vehicle safety.

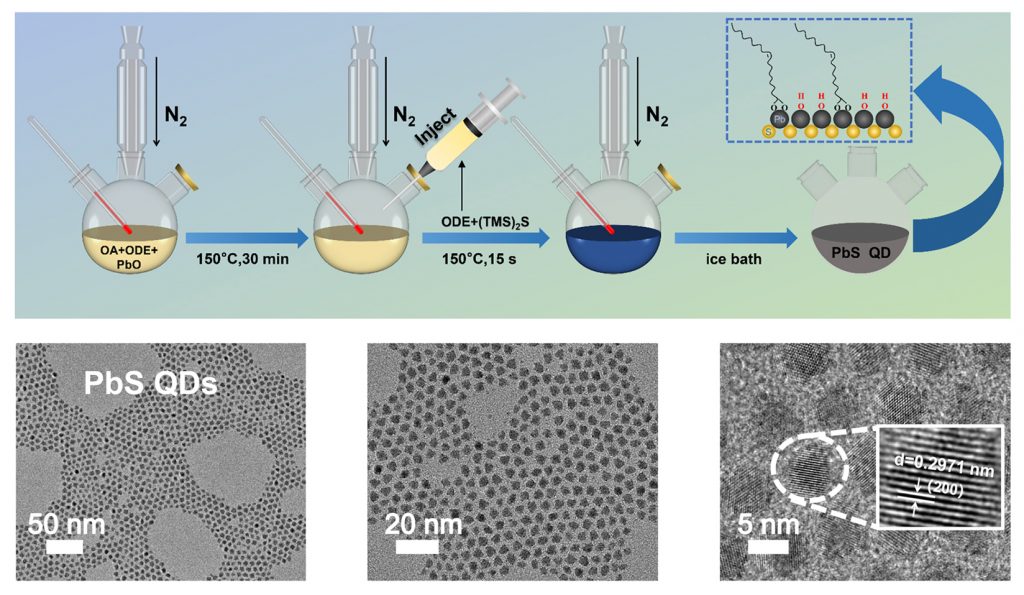

“Quantum dots are nanoscale semiconductors that efficiently convert light into electrical signals,” said author Yun Ye. “Our innovation lies in engineering quantum dots to deliberately trap charges, like water in a sponge, and then release them when needed, similar to how the eyes store light-sensitive pigments for dark conditions.”

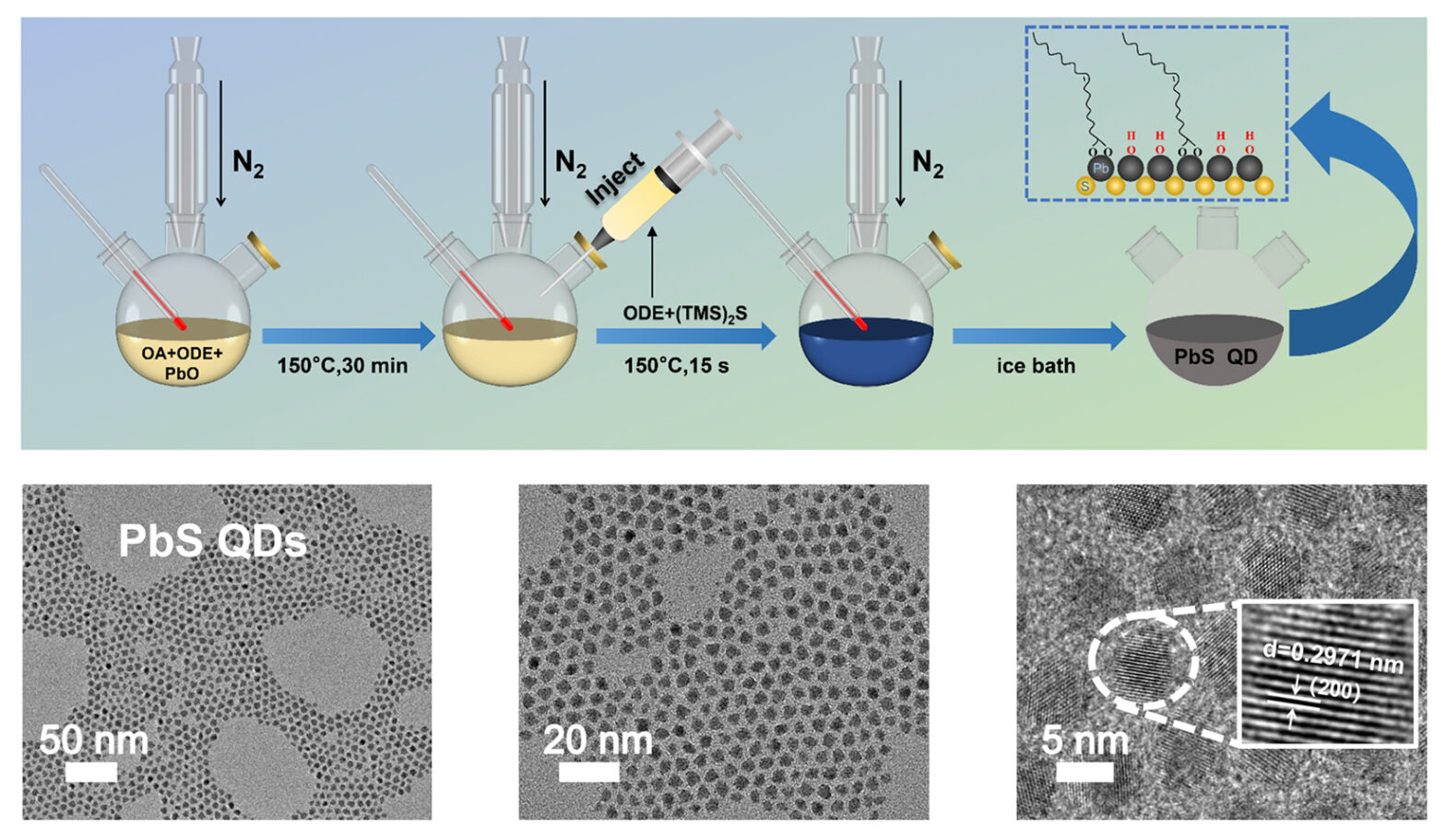

The sensor's fast adaptation stems from its unique design: lead sulfide quantum dots embedded in polymer and zinc oxide layers. The device responds dynamically by trapping or releasing electric charges depending on the lighting, much as the eyes adapt to darkness. Together with specialized electrodes, the layered design proved highly effective at replicating human vision and optimizing its light responses for the best performance.

“The combination of quantum dots, which are light-sensitive nanomaterials, and bio-inspired device structures enabled us to bridge neuroscience and engineering,” said Ye.

The device design is not only effective at adapting dynamically to bright and dim lighting; it also outperforms existing machine vision systems by reducing the large amount of redundant data that current vision systems generate.

“Conventional systems process visual data indiscriminately, including irrelevant details, which wastes power and slows down computation,” said Ye. “Our sensor filters data at the source, similar to how our eyes focus on key objects, and our device preprocesses light information to reduce the computational load, just like the human retina.”

In the future, the research group plans to further improve the device with larger sensor arrays and edge AI chips, which perform AI data processing directly on the sensor, or to pair it with other smart devices in cars for broader use in autonomous driving.

“Immediate uses for our device are in autonomous vehicles and robots operating under changing lighting conditions, such as driving out of tunnels into sunlight, but it could also inspire future low-power vision systems,” said Ye. “Its core value is enabling machines to see reliably where current vision sensors fail.”

###

Article title

A back-to-back structured bionic visual sensor for adaptive perception

Authors

Xing Lin, Zexi Lin, Wenxiao Zhao, Sheng Xu, Enguo Chen, Tailiang Guo and Yun Ye

Affiliations

Fuzhou University, Fujian Science and Technology Innovation Laboratory for Optoelectronic Information in China